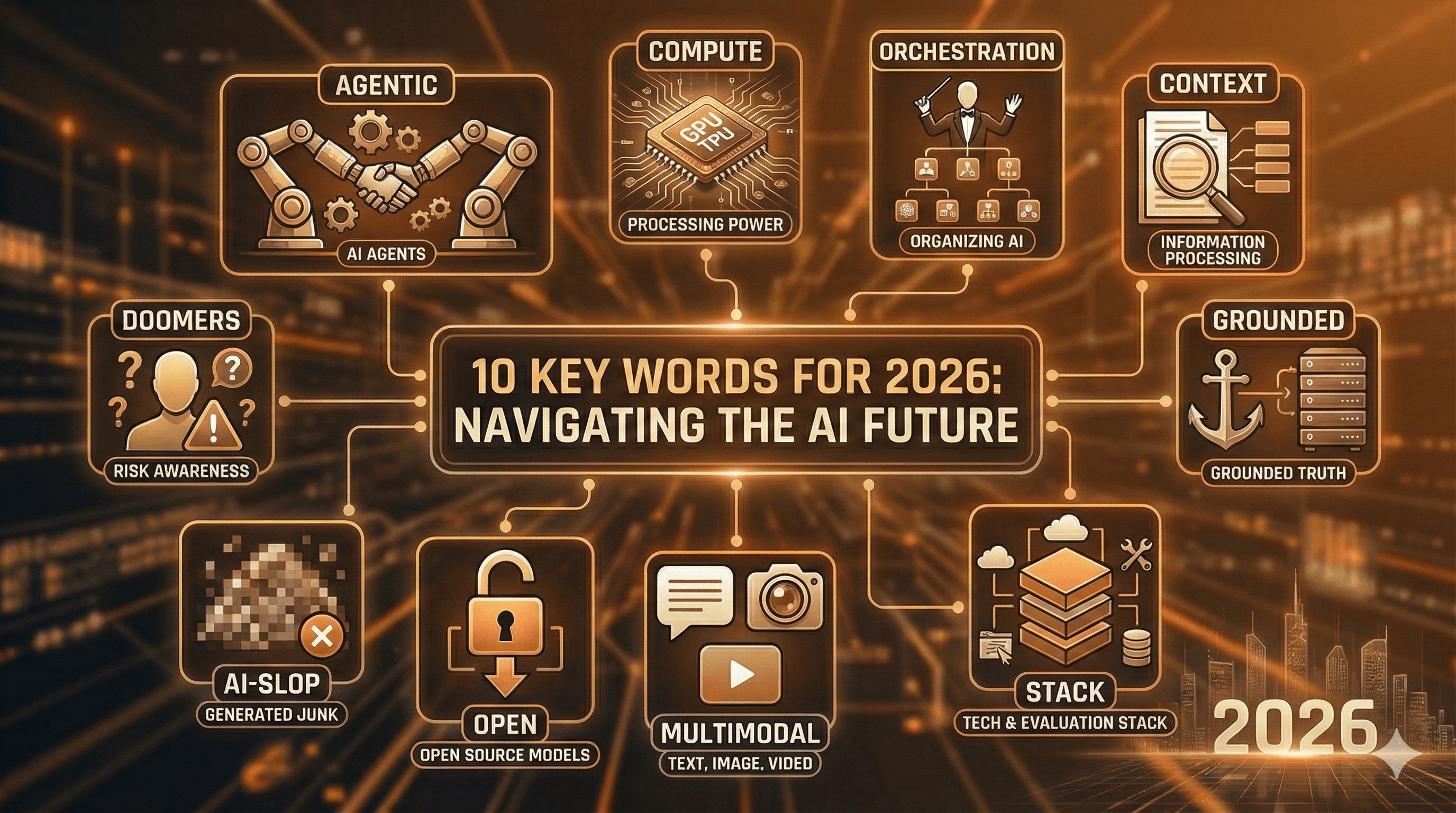

10 Key Words for 2026

In readiness for 2026, here are 10 words that I think are going to be really important and that you will want to keep an eye out for.

1 Agentic As well as relating to agents, this also refers to things like agentic browsers, agentic AI, and agentic research, where we're going to use agent-like methods, and agents that organise other agents, to do tasks previously done by humans.

2 Compute Compute describes the computing power that's required for all of these operations. It is why people are buying new GPUs, and it is what Google's TPUs provide. People talk about compute costs, and they talk about how one of the great limitations on a new company's growth is its ability to get enough compute. So it's going to be a major factor in 2026.

3 Orchestration In the context of AI, orchestration is the ability to organise things, in particular the ability to organise agents. Increasingly, as agents get more powerful, they call on other agents. They organise agents, and so we get agent orchestration by agents. At the top of that tree, of course, will be the humans. That is where the human-in-the-loop will sit: at the top of the orchestration process, not looking at every little piece all the way down the tree.

4 Context In this area, context refers to the amount of information being processed at the same time. Expect to see references to context engineering and context stacking, both advances on basic prompt engineering.

5 Grounded Grounded, or ground truth, refers to your data, your information, things which you hold to be true, to be valid, to have been checked out. AI becomes much more powerful if, instead of just using what it has learned from the internet, with all of its hallucinations and biases, we can ground the AI in our information, in our data. Then it can be more reliable, more predictable, and more powerful for our particular situation. One example of a popular AI tool that uses grounded information is NotebookLM. NotebookLM takes the data you upload, and that is what it works with, rather than the whole internet, the whole training database.

6 Stack Stack is a tech term that refers to the collection of things you use together. The tech stack (e.g., server language, operating system, various tools) has always been your choice. In addition, we are going to see the evaluation stack. How do you evaluate the new tools? How do you evaluate synthetic data? How do you evaluate the way that AI is creating questionnaires? How do you evaluate the way that AI is analysing open-ended text? We're also going to see references to the research stack, which is the combination of platforms and tools that companies use to accelerate their whole research process and make it more efficient.

7 Multimodal LLMs are large language models. The movement now is towards multimodal. More images, more video, more real-world approximations to what is going on. All of this will shift the focus away from the text-based approaches that we've had for the last three years to a much richer environment.

8 Open This is open as in open source and open weights. Models that you can download onto your computer or your servers and run without being attached to the internet. This is true of models like Llama from Meta, the Facebook people. It's true of the recent downloadable models from OpenAI. There are the Gemma models from Google. But many people would say that the leaders in this field are the Chinese platforms like Qwen and DeepSeek, and that they are going to make a bigger impact in 2026.

9 AI-slop AI slop refers to all of the stuff that's being created by AI: the videos, the reviews, the blogs, the fraudulent survey responses. Things which are not real, things which are potentially mischievous. Most people assume now that AI slop will be a much bigger percentage of the internet than stuff that has been created by humans. This has some really negative potential for life in general, but also for the construction of future models and the construction of synthetic data. Because if we try to build them out of AI slop, we're looking for trouble.

10 Doomers I think you're going to see the word Doomers quite a lot in 2026, but I would discourage you from using it. I feel the people who are using this term are trying to close your brain to reasonable arguments. There are real risks in AI. Risks to human rights. Risks to privacy. An enormous risk to the planet and the climate through the colossal power consumption. A risk of the consolidation of power, too much power ending up in one or two countries, too much power ending up in the hands of the tech bros. All of these are real concerns.

Well, there are my ten words. What words would you add to the list for 2026?